Human language and human culture have run hand-in-hand. Thinking about what it is to be human without language is impossible. From the first utterings of speech around 70,000 years ago, to computers taking dictation and translating speech today, language is fundamental to humanity’s growth as a species.

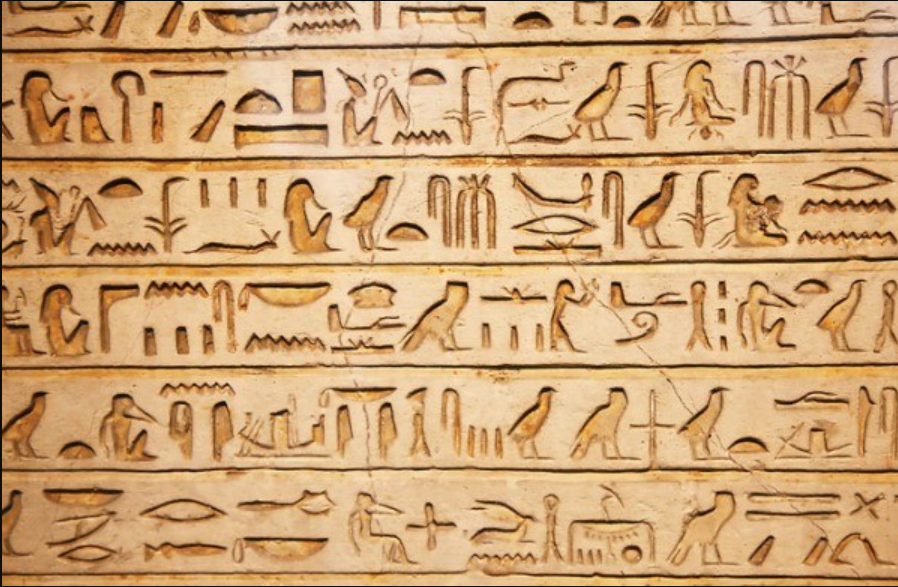

One: Writing Systems

Several great linguistic accomplishments have changed humankind. The first were the writing systems. People wanted to record what was said or known, so that knowledge could be passed onto others. Starting with pictographs, writing systems evolved to symbolic systems that allowed everything spoken to be recorded in a physical form. History now could be preserved.

Two: Printing Press

The second great linguistic revolution was the printing press. Till then, written language was scribed by the human hand, and books were rare and expensive. The knowledge within was limited to a few elites in society, keeping the masses from advancing equally. When Gutenberg perfected the printing press, humanity reached new intellectual heights.

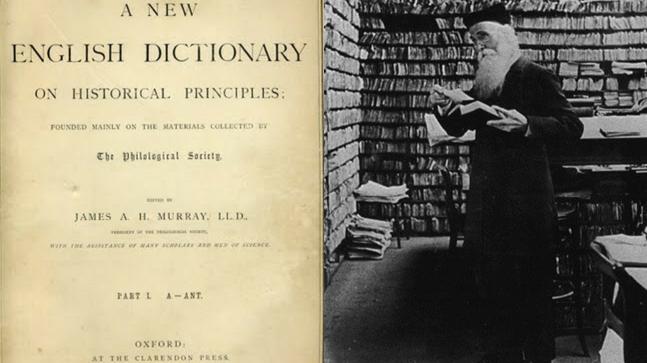

Three: Dictionary

The third linguistic revolution was the dictionary. Preceding that, words and meanings were passed on willy-nilly through generations, and the actual “system” of language was scattered among disparate books. In the nineteenth century, the immense task of creating the first comprehensive dictionaries was undertaken, yielding works such as the Oxford English Dictionary.

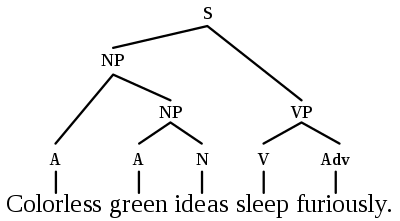

Four: Grammars

The fourth linguistic revolution started in 1957 with Noam Chomsky’s work on formally describing language systems as grammars. Before Chomsky, linguistics focused on historical study and preservation of the languages of the world. When Chomsky published his now famous “Syntactic Structures”, linguistics moved from a polyglot study to scientific theories of language structure and meaning.

Computers

The advent of the computer led to attempts to translate Chomsky’s grammars into rules for computers. Thus were born the areas of computational linguistics and natural language processing (NLP). But such grammars failed due to combinatorial explosion. With the advent of neural networks and machine learning in the early 1990s, it was hoped that computers could learn and use language without having to “hand code” linguistic information. And with the advent of Big Data, NLP systems could train on millions of translated documents and recorded human speech, eventually yielding speech recognition and translation systems.

Yet today, scientists are starting to realize that linguistic and human knowledge cannot be learned automatically by computers using only statistical methods. Language systems are arbitrary and the meaning of words like “mother”, “आमा”, or “母親”cannot be learned automatically by computers. They have to be taught.

Five: Linguistic and World Knowledge to Computers

That leads us to the fifth great linguistic revolution: the passing of linguistic and world knowledge to computers. Analogous to the writing systems, the printing press, and the knowledge of languages as systems, a new computer programming language and system can usher in the fifth great linguistic revolution. NLP++, VisualText, and the Conceptual grammar provide three important ingredients in moving linguistic and human knowledge to computers. Like the dictionary endeavors of the 19th century, the great linguistic knowledge migration to computers has begun in the 21st century. As in the dictionary construction projects of the 1800s, the current migration is to be done manually by humans — with computer assistance. This sounds ludicrous and impossible at first, but once one takes the time to understand this new NLP technology, the path is clear.

Computer Languages for Natural Language

The problem with “hand coding” linguistic and world knowledge into computers and using it was due to the lack of a computer language, knowledge base, and programming tools. Dealing with text, text trees, grammar rules, dictionaries, and knowledge bases using standard programming language is universally accepted by programmers to be impossible.

The first computer programing language to attempt to tackle natural language was “Prolog”, developed in 1972 where grammar rules could be described for language and Prolog would use those rules to parse automatically process text. The problem was that many different parse trees could often be found for the same text and parsing text using comprehensive rules became combinatorially impossible with computers taking minutes and hours to find possible parses.

With no computer technology to build intelligent NLP systems, computational linguists turned to the statistical methods of machine learning and neural networks. From the early 1990s to today, machine learning and neural networks have seen practical applications in machine translation and speech recognition. Yet writing intelligent computer programs to read and “understand” text was still far off.

At the turn of the millennium, a computer technology was created that offered programmers a way to write intelligent programs and migrate linguistic and world knowledge to computers. The programming language NLP++, the Conceptual Grammar knowledge base, and a programming tool called VisualText came on the scene. It was not until 20 years later that this technology would start gaining the attention of universities and industries alike.

NLP++

NLP++ is a programming language that includes automatically breaking text into tokens, has rules to organize text into trees, and functions to use and create knowledge bases. NLP++ is not a grammar language like Progog, but a full-blown computer language and Turing machine. It is bottom up, island driven parsing which does exhibit the combinatorial problems of Prolog. Being a real computer language, any type of linguistic analyzer can be written. But biggest real power is its ability to build and use knowledge on the fly while parsing language while also being able to use pre-stored knowledge as well.

Conceptual Grammar

NLP++ has data types and functions to create and use knowledge in a knowledge based called the Conceptual Grammar (CG). CG is a hierarchical knowledge base system capable of housing linguistic and conceptual information. Knowledge can be created, ingested into a system, and used to understand text. NLP++ analyzers also create knowledge directly from the text which can be used to generate new knowledge and understand the very text it is processing.

VisualText

Finally, the most important part of this technology is VisualText. Just like those compiling the first comprehensive dictionary, those facing today’s migration of linguistic and human knowledge into computers are facing the task of doing this “by hand”. The good news is that the VisualText IDE (integrated development environment) is indispensable in supporting and accelerating this endeavor.

Building linguistic and human knowledge is a visual task. Syntactic trees, hierarchical knowledge – all of which is understood easily when displayed clearly. VisualText not only clearly displays knowledge, it creates many interconnections between the tree, the text, and knowledge.

VisualText also helps create text analyzers that can themselves build the linguistic and knowledge needed for this migration to computers. It is extremely common for programmers create numerous text analyzers in the pursuit of creating a human digital reader, including pulling knowledge from semi-formatted text such as Wiktionary pages.

Conclusion

The fifth linguistic revolution shares two common traits with dictionary-building in the 19th century: mass participation and a common medium. In the 19th century, the medium was the physical book with pages that could be mass-produced. The effort to gather information about words was spread via newspapers to contributors around the globe. The effort involved thousands of people.

It will take hundreds or even thousands of people to migrate linguistic and human knowledge to computers. Currently, a dozen or more people are engaged in this process. But as the movement grows and dozens turn into hundreds and hundreds into thousands, the task can be accomplished.

And it is the common medium of NLP++, VisualText, and the Conceptual grammar that allows everyone to work together toward the goal of creating the linguistic, world, and algorithmic knowledge needed to migrate knowledge to computers — a project of vital importance, enabling computers to assist us in the arduous tasks of reading text and understanding it like humans.

![]()